| Note: This tutorial assumes that you have completed the previous tutorials: Moving the Arm. |

| |

Grasping an Object

Description: This tutorial shows how to detect an object using ar_kinect and then grasp the object using simple_arm_server.Tutorial Level: INTERMEDIATE

Contents

Overview

There are several steps to this tutorial. First, we will explore how to use AR (augmented reality) markers to skip having to write perception code. We then show how to find a block using the ar_kinect package and tf. Finally, we'll command the arm to pick up the block from the command line.

Creating Some AR Blocks

The first step in this tutorial will be to apply some AR markers to your blocks. For starters, print out the PDF of marker #9. This marker should be cut out and attached to a wooden block, like this:

Bringup

Once you have started Mini Maxwell, you will still need to bring up the arm control:

roslaunch mini_max_defs simple_arm_server.launch

Localizing Blocks

To localize the blocks, we will use the ar_kinect package. This package provides a node which looks for AR markers in a Kinect point cloud and then outputs their locations. This package uses the ARtoolkit internally, but adds some Point Cloud processing to get better results (especially with small markers) than could be achieved with the RGB camera alone.

There are a number of configuration parameters which we will cover later, for now, we will use a launch file which has all these parameters already defined for marker #9:

roslaunch ar_kinect ar_kinect.launch

I recommend placing a block under Mini Max's neck to support the head without consuming power. Place your AR-tagged block in front of Mini Max like this:

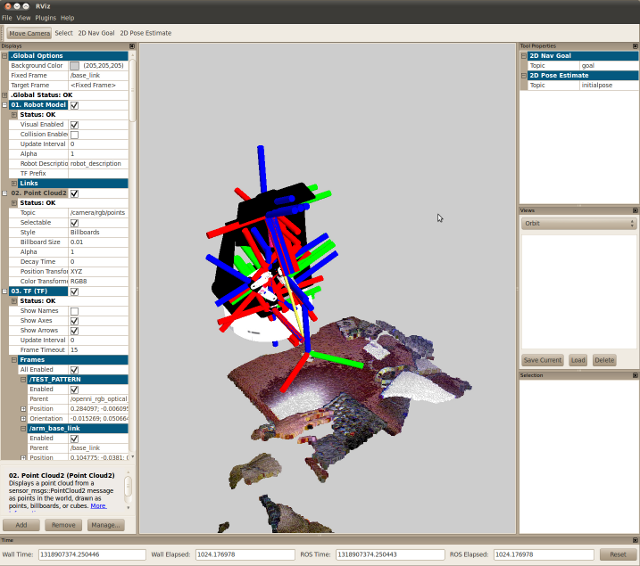

With RVIZ, you can add a TF display (it is recommended to turn off the display of names, or your view will be quite crowded):

As you can see, ar_kinect is outputting a TF frame located on the block, if you move the block around, you will see the frame move with it.

We can now find out where the block is by tracking the TF frame associated with it. To find the name of this TF frame, we can look at the launch file we used earlier:

<launch>

<node name="ar_kinect" pkg="ar_kinect" type="ar_kinect" output="screen">

<param name="marker_pattern_list" type="string" value="$(find ar_kinect)/data/objects_kinect"/>

<param name="marker_data_directory" type="string" value="$(find ar_pose)"/>

<param name="threshold" type="int" value="100"/>

</node>

</launch>So, the ar_kinect node is using a parameter called marker_patter_list which is loaded from a file in the ar_kinect/data folder. It's contents look like:

#the number of patterns to be recognized 1 #pattern 1 TEST_PATTERN data/4x4/4x4_9.patt 80.0 0.0 0.0

This file specifies that we can recognize 1 pattern, called TEST_PATTERN (the name is also the name of the TF frame). The rest of the parameters aren't relevant now, if you want to find out more about them, see the documentation for the ar_pose package, from which ar_kinect is derived.

To locate the block, we can find the transformation from the TEST_PATTERN frame to the base_link frame using a command line tool from tf:

$ rosrun tf tf_echo base_link TEST_PATTERN

At time 1318907232.477

- Translation: [0.285, -0.021, 0.019]

- Rotation: in Quaternion [0.037, -0.060, -0.635, 0.769]

in RPY [0.133, -0.045, -1.383]

At time 1318907232.945

- Translation: [0.285, -0.021, 0.019]

- Rotation: in Quaternion [0.035, -0.044, -0.634, 0.771]

in RPY [0.111, -0.023, -1.378]

^COnce you see a valid transformation, you can CTRL-C the program to stop it. We'll ignore the rotation aspects of the transformation for now and focus on the translation. The translation above says that the block is 0.285 meters in the X direction from base_link, which means that the block is 0.285 meters in front of the center of the robot. It is -0.021m in the Y direction, or 0.021m to the right of the center of the robot (by convention in ROS, Y always points to the left on base_link). Finally, it is 0.019m above the floor.

Commanding the Arm

We can now use the position found from tf as a command to the simple_arm_server as we did in the previous tutorial, Moving the Arm:

$ rosrun simple_arm_server simple_arm_server_test.py 0.285 -0.021 0.019 1.57

Here we have specified the pitch angle to be 1.57 radians, or 90 degrees. The gripper should end up facing nearly downwards. The simple_arm_server will attempt to find the closest solution possible and so it may not be exactly 90 degrees.

The arm may not reach the block exactly, depending on the calibration of your Mini Maxwell. When you are satisfied that the arm has done its job, you should tuck the arm to prevent it from overheating:

rosrun mini_max_apps tuck_arm.py

Putting it All Together (in Python)

Our test program will have several parts:

- Capturing the block position (through TF)

- Sending that position, and a gripper opening, to the simple_arm_server

- Retracting the arm with the block grasped.

TF Lookup

The first thing we have to do is create a tf listener that will subscribe to tf data coming out of various nodes (including ar_kinect). Since tf transforms are based on a buffer of transform data, we want to wait a few seconds for the buffer to fill up. In normal operation, you'll often be performing lookups in loop, and so you won't need this wait (but don't forget the try/except):

Now that we have a tf listener with data we can ask for a transform. We will now look up the transform from base_link to TEST_PATTERN. The rospy.Time(0) simply says to find the latest transformation:

If this call succeeds x, y and z will contain the location of our AR block.

Grasping The Block

Grasping the block is very similar to the goals we produced in the previous tutorial, Moving the Arm. The main difference is that now we will add some gripper commands. We will add a command at the beginning of the goal to open the gripper, and another at the end to close it.

1 # create a new goal

2 goal = MoveArmGoal()

3 goal.header.frame_id = "base_link"

4

5 # open gripper, to 4cm, allow 1 second

6 action1 = ArmAction()

7 action1.type = ArmAction.MOVE_GRIPPER

8 action1.command = 0.04

9 action1.move_time = rospy.Duration(1.0)

10 goal.motions.append(action1)

11

12 # move to block location

13 action2 = ArmAction()

14 # this action will move the arm to a position

15 action2.type = ArmAction.MOVE_ARM

16 # assign an xyz position

17 action2.goal.position.x = x

18 action2.goal.position.y = y

19 action2.goal.position.z = z

20 # assign an orientation, angle the gripper down

21 q = quaternion_from_euler(0, 1.57, 0, 'sxyz')

22 action2.goal.orientation.x = q[0]

23 action2.goal.orientation.y = q[1]

24 action2.goal.orientation.z = q[2]

25 action2.goal.orientation.w = q[3]

26 # move from current position to new one in 3.0 seconds

27 action2.move_time = rospy.Duration(3.0)

28 goal.motions.append(action2)

29

30 # close gripper, to 1", allow 1 second

31 action3 = ArmAction()

32 action3.type = ArmAction.MOVE_GRIPPER

33 action3.command = 0.0254

34 action3.move_time = rospy.Duration(1.0)

35 goal.motions.append(action3)

Retracting the Arm

We can add an additional stop on our goal, to retract the arm (to a pose similar to the un-tucked position):

1 # move to retracted position

2 action4 = ArmAction()

3 # this action will move the arm to a position

4 action4.type = ArmAction.MOVE_ARM

5 # assign an xyz position

6 action4.goal.position.x = 0.15

7 action4.goal.position.y = 0.07

8 action4.goal.position.z = 0.16

9 # assign an orientation, angle the gripper down

10 q = quaternion_from_euler(0, 1.57, 0, 'sxyz')

11 action4.goal.orientation.x = q[0]

12 action4.goal.orientation.y = q[1]

13 action4.goal.orientation.z = q[2]

14 action4.goal.orientation.w = q[3]

15 # move from current position to new one in 3.0 seconds

16 action4.move_time = rospy.Duration(3.0)

17 goal.motions.append(action4)

The Complete Code

1 #!/usr/bin/env python

2

3 # typical imports

4 import roslib; roslib.load_manifest('simple_arm_server')

5 import rospy, actionlib

6

7 # import TF helpers

8 import tf

9 from tf.transformations import quaternion_from_euler

10

11 # import MoveArm action messages

12 from simple_arm_server.msg import *

13

14 # start a node

15 rospy.init_node('grasp_block')

16

17 # create an action client

18 client = actionlib.SimpleActionClient('move_arm', MoveArmAction)

19 client.wait_for_server()

20

21 # create tf listener

22 listener = tf.TransformListener()

23

24 # pause 5.0 seconds for tf buffer to fill

25 rospy.loginfo("Node Initialized, sleeping...")

26 rospy.sleep(5.0)

27

28 # transform point

29 try:

30 ((x,y,z), rot) = listener.lookupTransform('base_link', 'TEST_PATTERN', rospy.Time(0))

31 rospy.loginfo("Transform: (" + str(x) + "," + str(y) + "," + str(z) + ")")

32 except (tf.LookupException, tf.ConnectivityException):

33 rospy.logerr("Transform failed.")

34

35 # create a new goal

36 goal = MoveArmGoal()

37 goal.header.frame_id = "base_link"

38

39 # open gripper, to 4cm, allow 1 second

40 action1 = ArmAction()

41 action1.type = ArmAction.MOVE_GRIPPER

42 action1.command = 0.04

43 action1.move_time = rospy.Duration(1.0)

44 goal.motions.append(action1)

45

46 # move to block location

47 action2 = ArmAction()

48 # this action will move the arm to a position

49 action2.type = ArmAction.MOVE_ARM

50 # assign an xyz position

51 action2.goal.position.x = x

52 action2.goal.position.y = y

53 action2.goal.position.z = z

54 # assign an orientation, angle the gripper down

55 q = quaternion_from_euler(0, 1.57, 0, 'sxyz')

56 action2.goal.orientation.x = q[0]

57 action2.goal.orientation.y = q[1]

58 action2.goal.orientation.z = q[2]

59 action2.goal.orientation.w = q[3]

60 # move from current position to new one in 3.0 seconds

61 action2.move_time = rospy.Duration(3.0)

62 goal.motions.append(action2)

63

64 # close gripper, to 1", allow 1 second

65 action3 = ArmAction()

66 action3.type = ArmAction.MOVE_GRIPPER

67 action3.command = 0.0254

68 action3.move_time = rospy.Duration(1.0)

69 goal.motions.append(action3)

70

71 # move to retracted position

72 action4 = ArmAction()

73 # this action will move the arm to a position

74 action4.type = ArmAction.MOVE_ARM

75 # assign an xyz position

76 action4.goal.position.x = 0.15

77 action4.goal.position.y = 0.07

78 action4.goal.position.z = 0.16

79 # assign an orientation, angle the gripper down

80 q = quaternion_from_euler(0, 1.57, 0, 'sxyz')

81 action4.goal.orientation.x = q[0]

82 action4.goal.orientation.y = q[1]

83 action4.goal.orientation.z = q[2]

84 action4.goal.orientation.w = q[3]

85 # move from current position to new one in 3.0 seconds

86 action4.move_time = rospy.Duration(3.0)

87 goal.motions.append(action4)

88

89 # execute request

90 try:

91 client.send_goal(goal)

92 client.wait_for_result()

93 print client.get_result()

94 except rospy.ServiceException, e:

95 print "Service did not process request: %s"%str(e)