| |

Extrinsic Calibration of Camera(s) to a Static Target (Deprecated)

Description: This tutorial will explain how to use the industrial_extrinsic_cal package to calibrate one or two cameras to a checkerboard or circle grid target. If using two cameras, they both should have the same target in their field of view. If using the unchanged repository provided yaml files for parameters, then you will need at least one Asus/Kinect cameras and a checkerboard (12x12 square checkerboard (11x11 points)) or circle grid (5x7 circles).Keywords: Extrinsic Calibration Camera Target

Tutorial Level:

Overview

The industrial_extrinsic_cal package currently has one main Calibration Job library, one test ROS node, and one service node. The specific observer implemented is of a class ROSCameraObserver which takes a rostopic and uses OpenCV algorithms to find the pattern, and return the (x,y) location of the points as the set of observations. Thus this tutorial only applies for cameras which can publish a rostopic. The parameters in the camera.yaml file are specific to an Asus/Kinect. The parameters within the target.yaml file are specific to a circle grid of 5 x 7 25-mm circles spaced 35 mm apart.

There are several options for running this tutorial (different combinations of yaml files) and this tutorial will include two combinations of the currently available options.

Required Materials

Make sure you've installed all the dependencies per the wiki and feel free to become familiar with the specifics in each launch/yaml file.

Targets

There are currently 7 target definitions in the yaml directory. The targets that will be used in this tutorial are defined by "test2_target_def.yaml" and "circlegrid5x7_target_def.yaml". The test2 target is a Checkerboard which has a grid of 12 by 12 squares which are at 0.040 m spacing. There are therefore 11x11 or 121 points on this grid. The circle grid target can be printed on an 8.5x11 standard letter sized paper. This grid can be found in the targets directory. The grid has 35 circles and will need to be oriented with the length axis of the paper nearly vertical (will visually be 7 rows and 5 columns which translates to 5 points per row and 7 points per column in OpenCV). Also "circlegrid7x5_target_def.yaml" is available if you want to lay the paper/pattern horizontally.

Camera(s)

The two options in this tutorial are currently 1 camera (and 1 robot model, 1 target) or 2 cameras (no robot, 1 target). The type of cameras currently supported are the openni compatible type like the Asus Xtion Pro or the Microsoft Kinect (for Xbox 360).

Highly Recommended: Perform Intrinsic Calibration

While not required, if your camera's intrinsic parameters are different from the default values, your alignment will be affected. The calibration routine uses a camera definition file which contains the intrinsic parameters which are used in calculating the error between the camera's observed points and the targets known points. These intrinsic paramteres have a direct effect on the extrinsic calibration:

- focal_length_x: 525

- focal_length_y: 525

- center_x: 320

- center_y: 240

- distortion_k1: 0.01

- distortion_k2: 0.02

- distortion_k3: 0.03

- distortion_p1: 0.01

- distortion_p2: 0.01

It is therefore highly recommended that you perform an intrinsic calibration and update these values for your camera.

Optional: Robot/Model

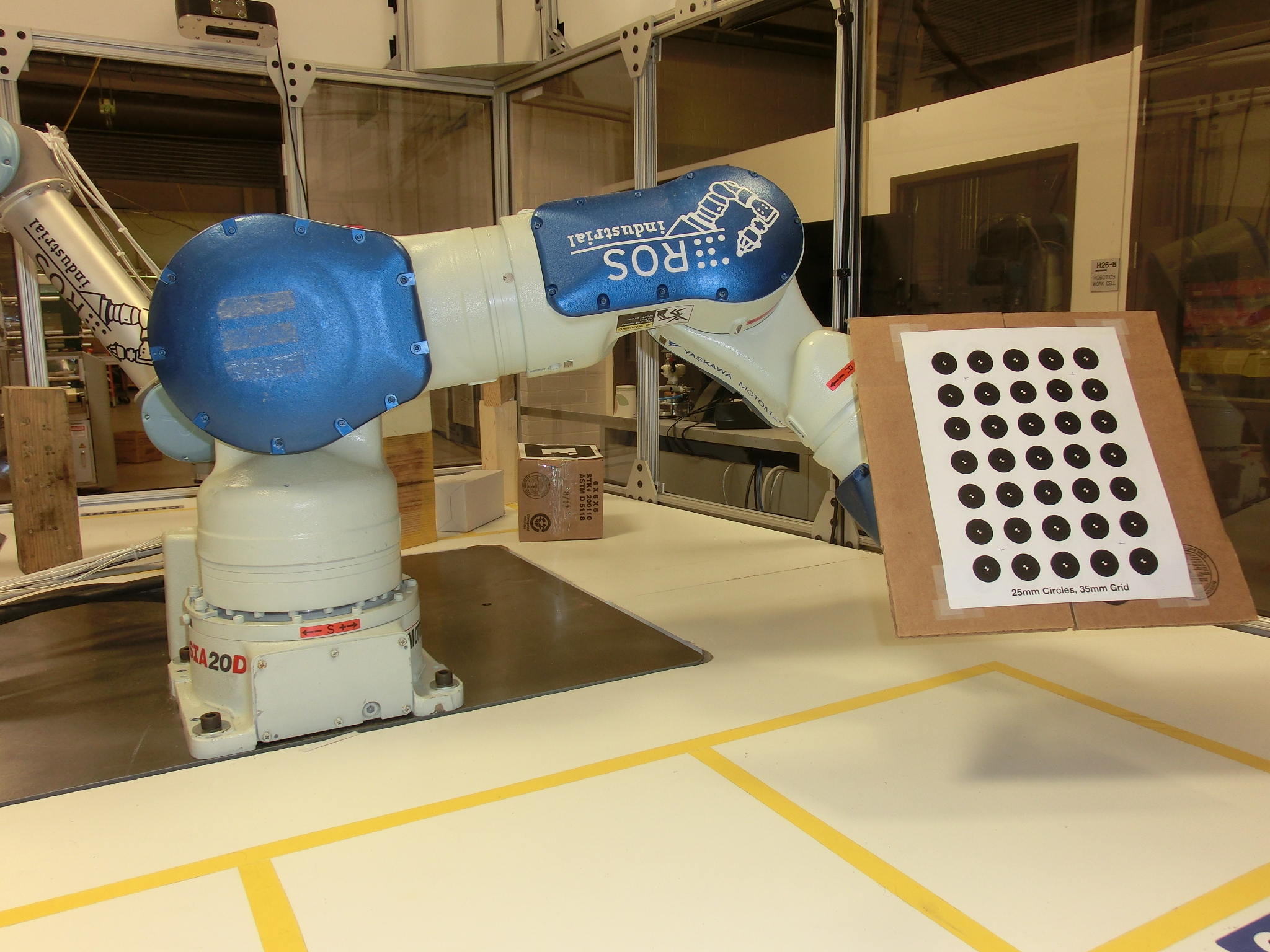

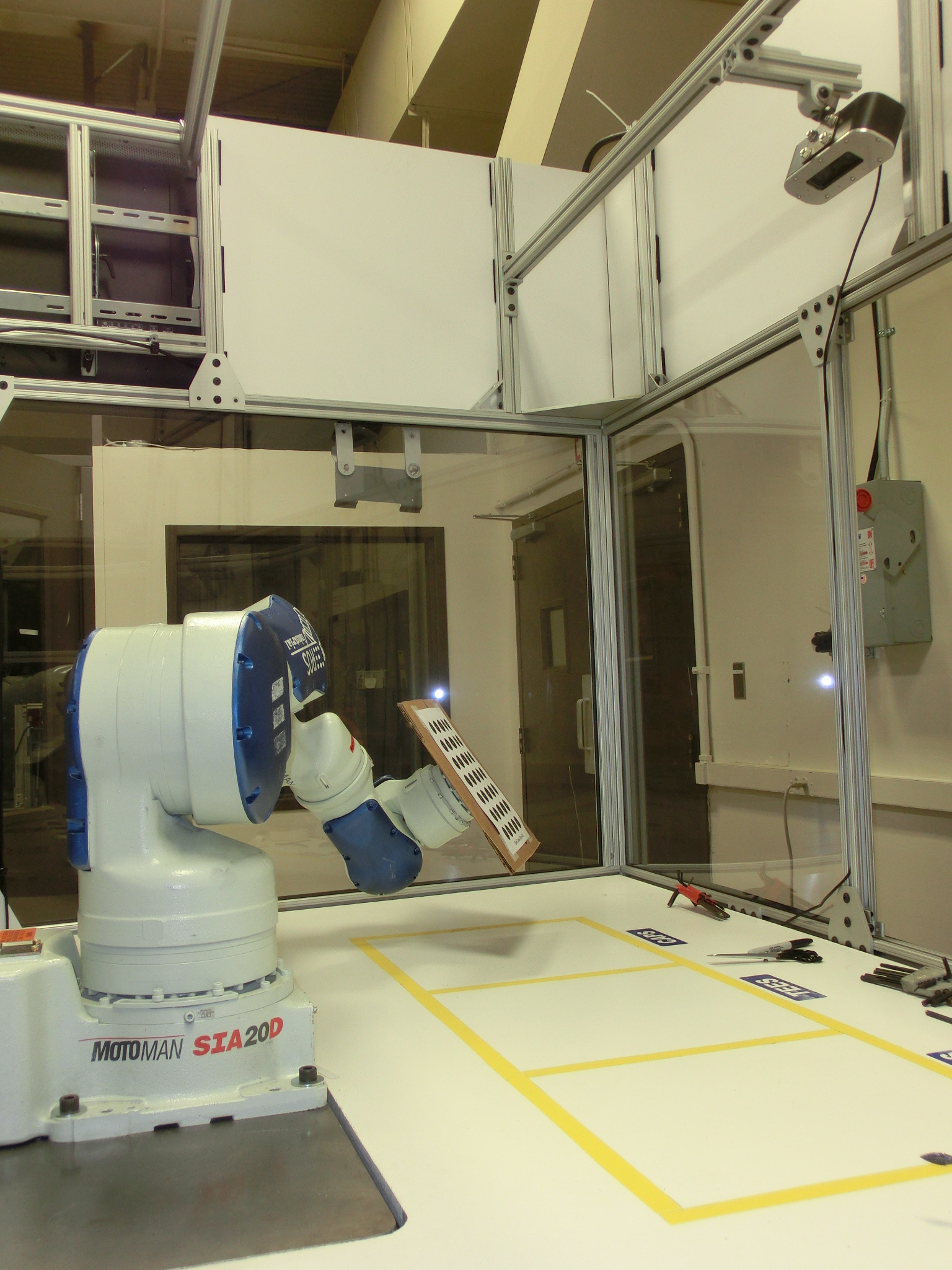

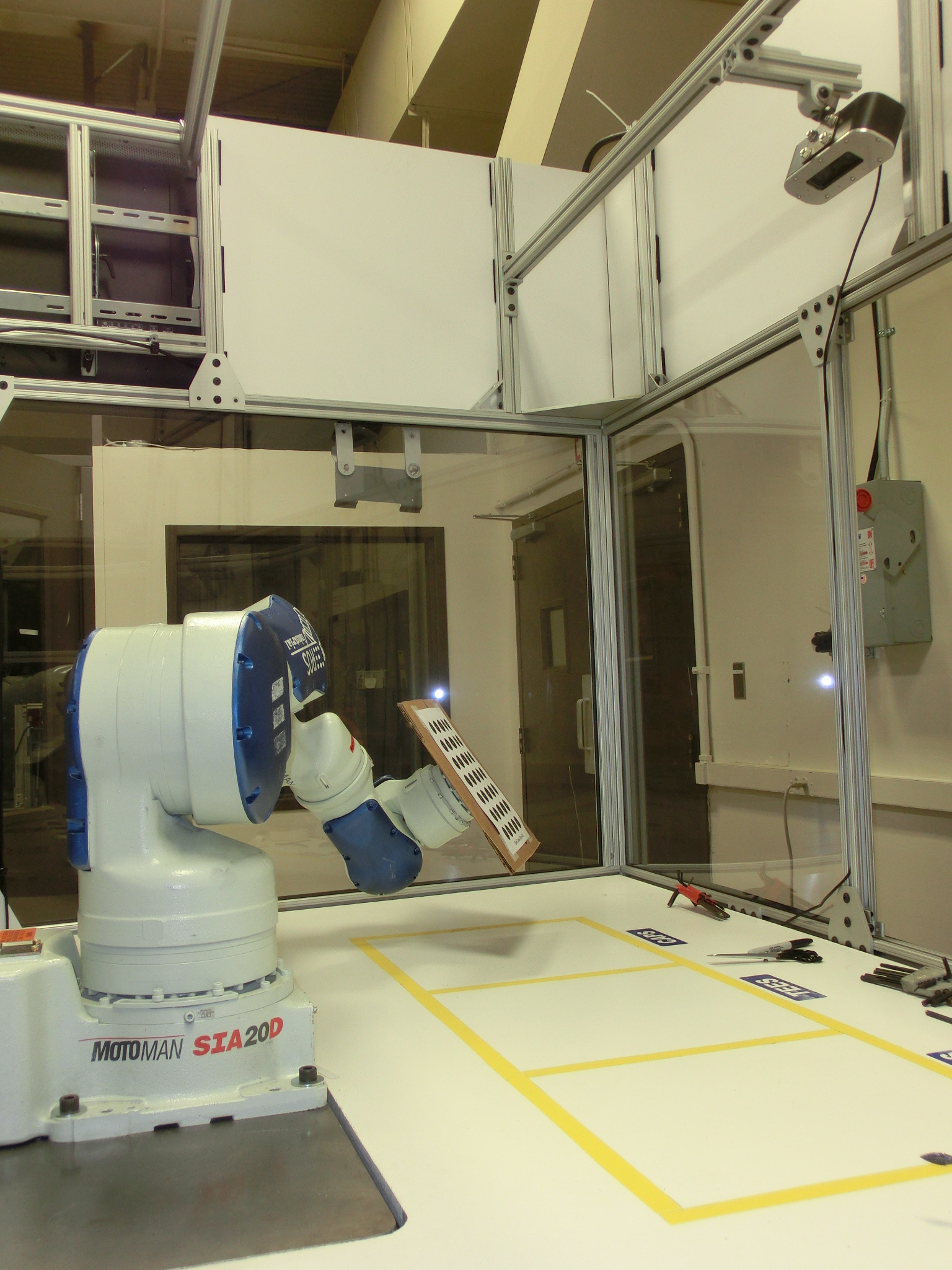

If running this tutorial with a robot, you will need to be able to launch the robot model. This can be done either in simulation mode or live mode. The example here is run with a Motoman SIA20d robot in live mode (no motion, only getting current state of joints).

Optional: Calibration Plate

See section 7.4 for specific instructions on using the calibration plate (plate attaches to robot arm and has alignment holes for circle grid target).

Physical Setup

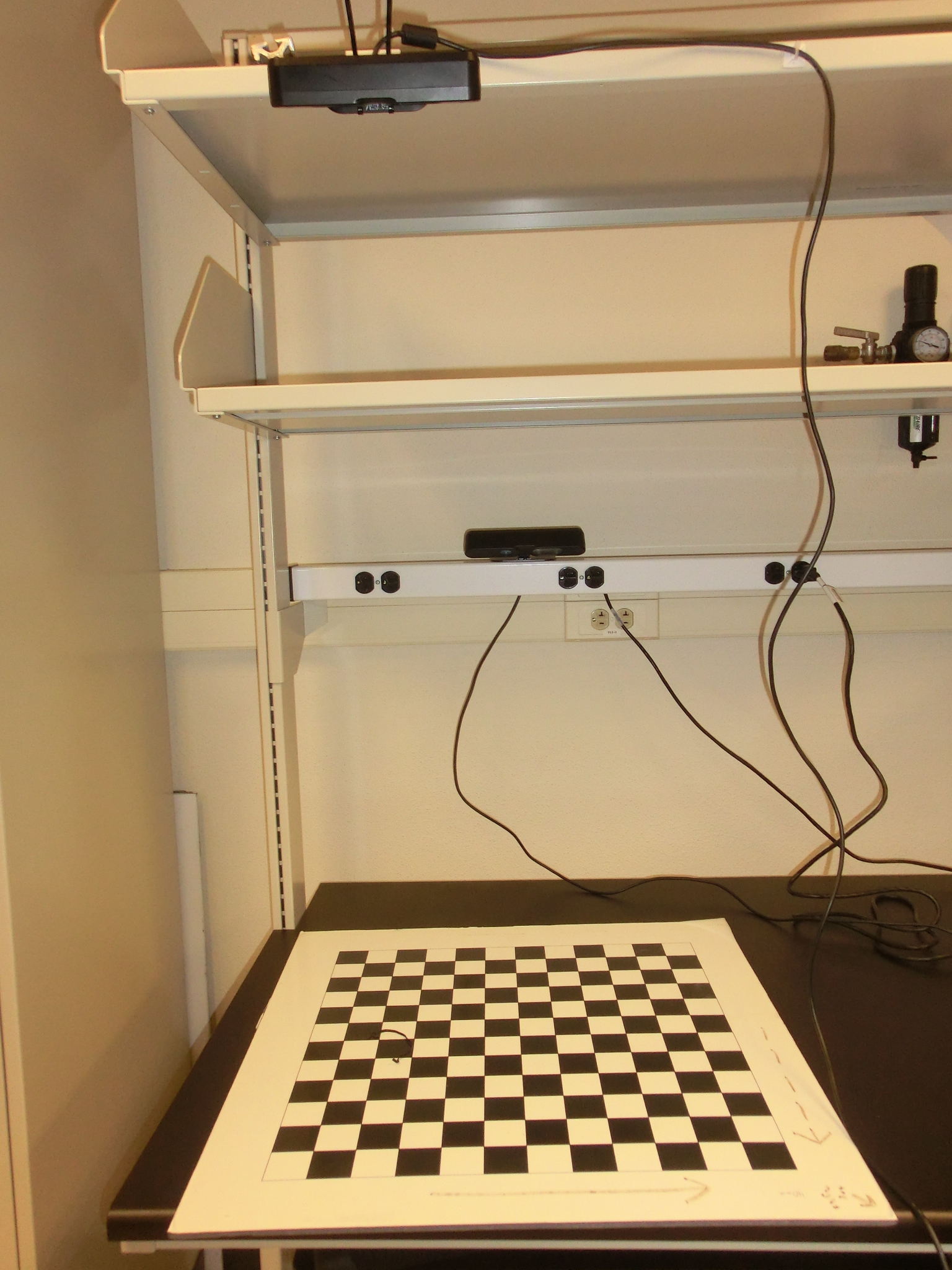

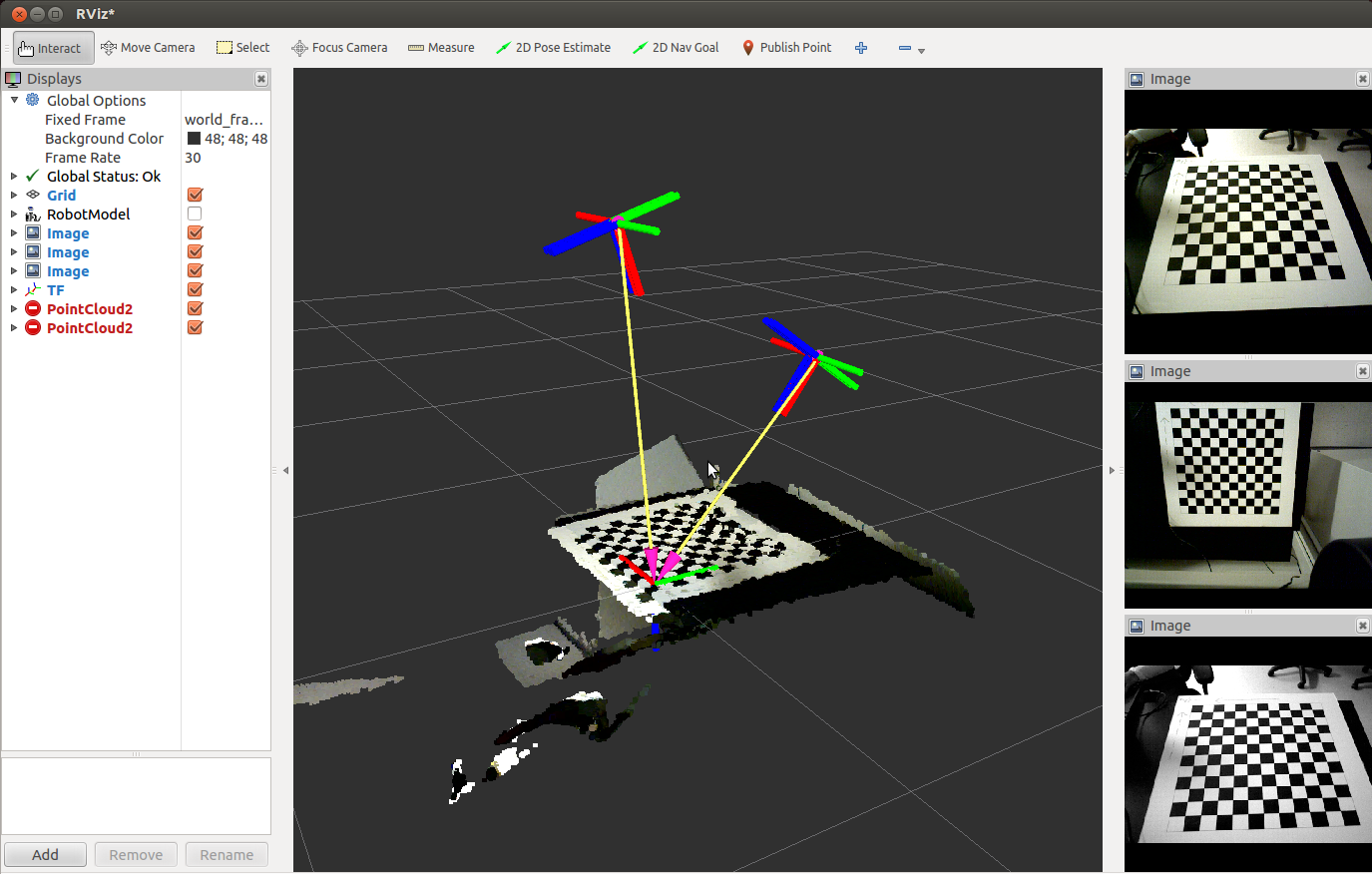

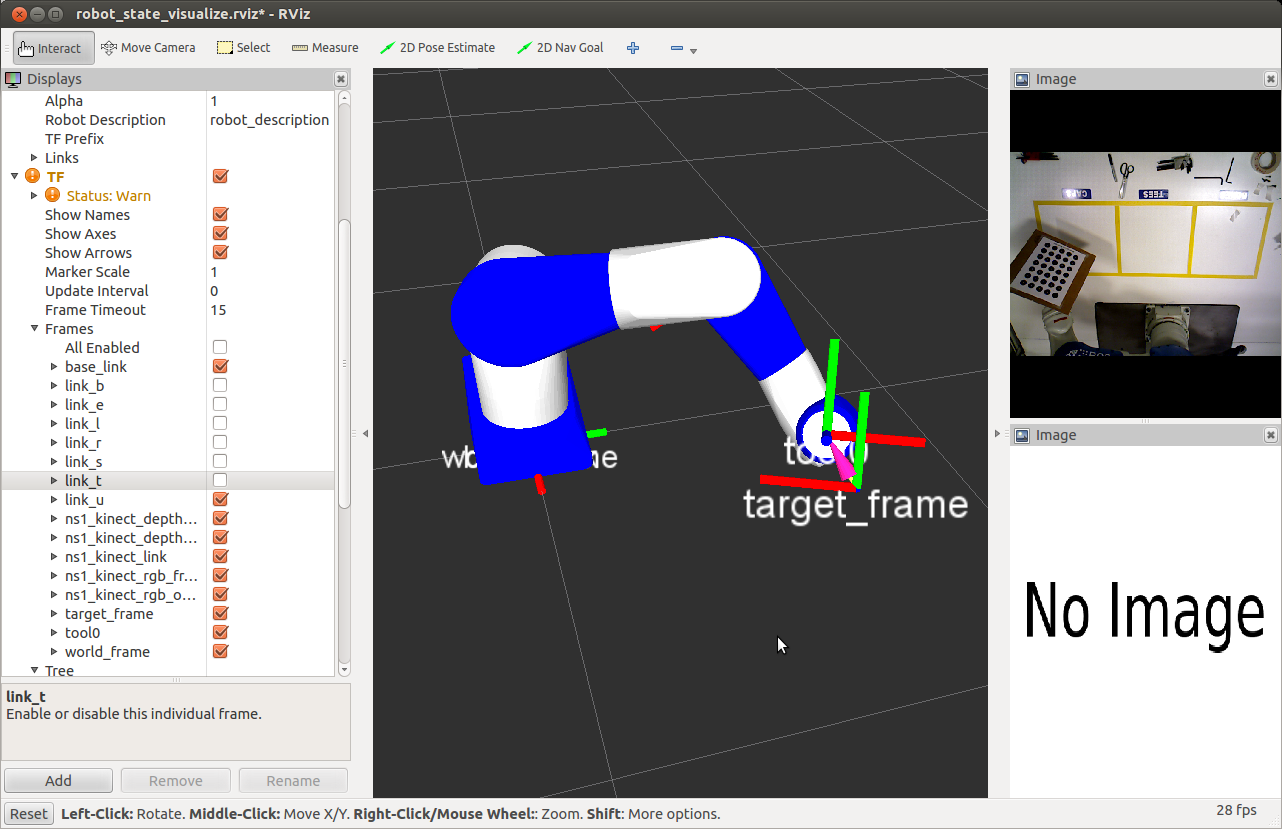

For the two cases covered in this tutorial, below are photos of the setup. The first set of pictures shows two Asus cameras viewing one 12x12 checkerboard. The second set of pictures shows one Asus camera viewing one circle grid which is attached to a robot.

Establish Correct transforms for static publishers

In order for this routine to work correctly the location and orientation of the target must be known and oriented properly. In the case of camera/target, you can simply assume the target is at the origin, and you can run everything below as is. However in the case of the camera/robot you must have a known target location which is relative to the robot. The best way to verify that you've picked/created a correct transform for the target is to run rviz. You can either follow instructions for the gui (launch cell_calibration.launch) or follow the first three steps (5.2.1, 5.2.2, 5.2.3) of the terminal command instructions and view the robot to target frame published on rviz. The target_frame should be located at a corner circle, it should be oriented with the z-axis (blue axis in rviz) is oriented away from the camera, and the x and y axes are oriented such that the circles are all in the positive quadrant. If you are using the calibration plate the target_frame should be at the known distances mention in Section 7.4. Follow the instructions to see results.

Instructions

Using RQT GUI

The fastest way to use this calibration tool (if you are performing camera to robot calibration) is to launch the rqt calibration gui; example video here. The gui is located in the hydro branch, not the hydro-devel branch of the repo. To run the gui, launch the cell_calibration file, and hit the calibrate button.

roslaunch industrial_calibration_gui cell_calibration.launch

If you are having trouble with the gui display, it may be a perspective issue - you may have to run the above command with "display_lock:=false" at the end in order to try to fix or import the perspective.

If you are having trouble viewing things in rviz it is likely that the .rviz config is not configured to display the useful objects for this tutorial. There are two solutions: 1) save over the default .rviz config file or 2) make the change outlined here. See below for details on what rviz types should be displayed, or use the .rviz file in this tutorial package (under config).

Terminal Commands (Step by Step Explanation)

Broadcast static transform(s)

First start up the a static_tf_broadcaster which will broadcast transforms from the reference frame in the cal_job.yaml file to the target frame from the target.yaml file. In the first case with two cameras, and one target (no robot) the launch fille will broadcast a transform from the reference frame (world_frame) to the target frame (target_frame). In the case of one camera, one target and a robot, the launch file will broadcast one transform from the reference frame (world_frame) to the robot parent link (base_link) and a second transform from the robot end effector frame (tool0) to the target frame (target_frame). NOTE: the tool0 to target_frame transform will vary for each setup/robot, you will have to change this for your setup to work; see section 4.1 for more details.

For 2 cameras, 1 target:

roslaunch industrial_extrinsic_cal world_to_target_tf.launch

For for 1 camera with robot:

roslaunch industrial_extrinsic_cal world_to_robot_tf.launch

Start Camera(s)

Basically use openni_launch, but this package/tutorial has renamed links and remapped topics. For 2 cameras, 1 target:

roslaunch industrial_extrinsic_cal cameras.launch

or for 1 camera with robot:

roslaunch industrial_extrinsic_cal camera.launch

Run Visualization

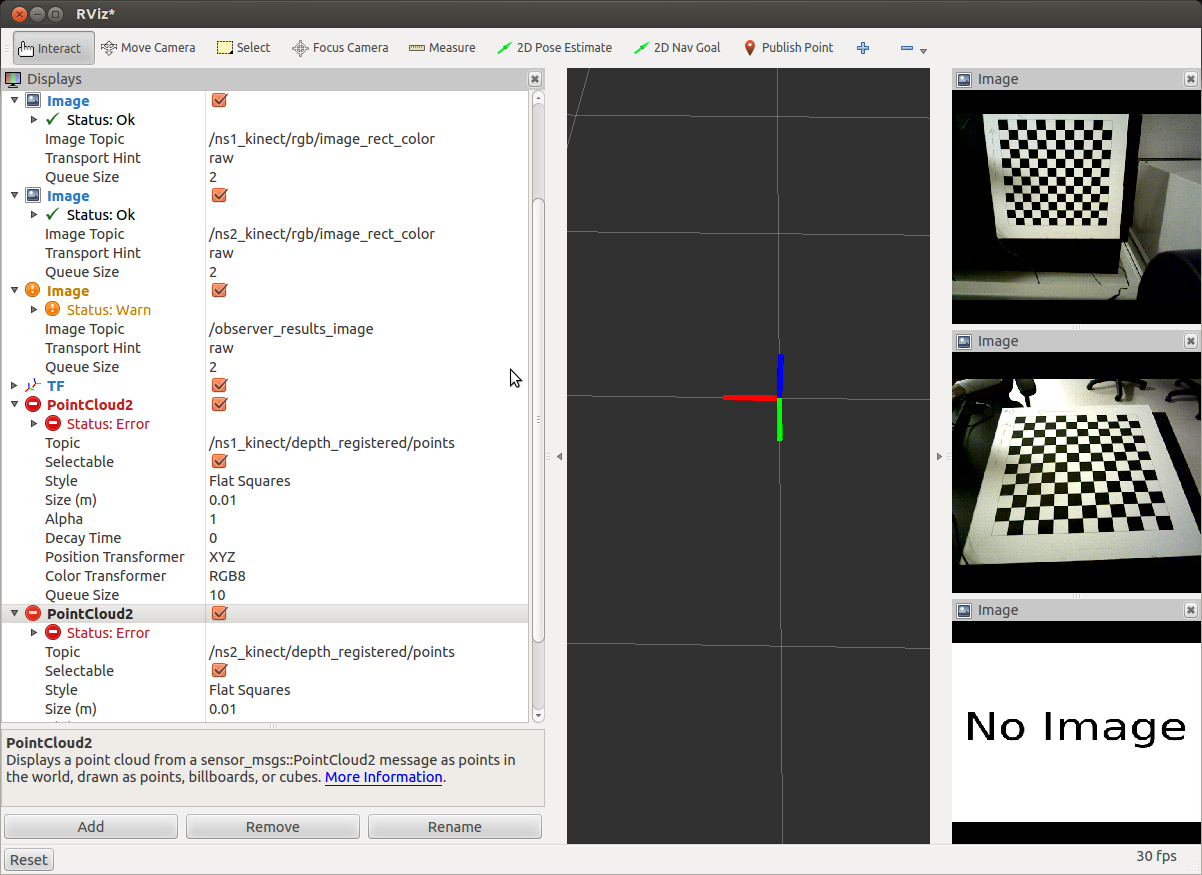

If you'd like to see what's happening, run rviz, with or without a robot and subscribed to PointCloud2(s) and/or Image(s). For 2 cameras, 1 target:

roslaunch industrial_extrinsic_cal visualize_cal.launch

Useful image topics which you can subscribe to: ns1_kinect/rgb/image_rect_color ns2_kinect/rgb/image_rect_color observer_results_image Useful PointCloud2 topics to which you can subscribe: ns1_kinect_depth_registered/points ns2_kinect_depth_registered/points TF's you should see: world_frame, target_frame, all kinect frames. Note: PointCloud2's will not be publishing because a transform does not yet exist between the camera and anything else in the world.

For for 1 camera with robot:

roslaunch motoman_sia10d_support robot_state_visualize_sia10d.launch robot_ip:=123.456.78.9 controller:=fs100

Useful image topics which you can subscribe to: ns1_kinect/rgb/image_rect_color, observer_results_image. Useful PointCloud2 topics to which you can subscribe: ns1_kinect_depth_registered/points. TF's you should see: world_frame, target_frame, all robot frames, all kinect frames. Note: PointCloud2's will not be publishing because a transform does not yet exist between the camera and anything else in the world.

Run Calibration

Running Calibration Node

Currently when you run the calibration node, the yaml files which describe a 2 camera, 1 checkerboard 11x11 target system are: test2_camera_def.yaml, test2_target_def.yaml, test2_caljob_def.yaml.

roslaunch industrial_extrinsic_cal calibration_node.launch

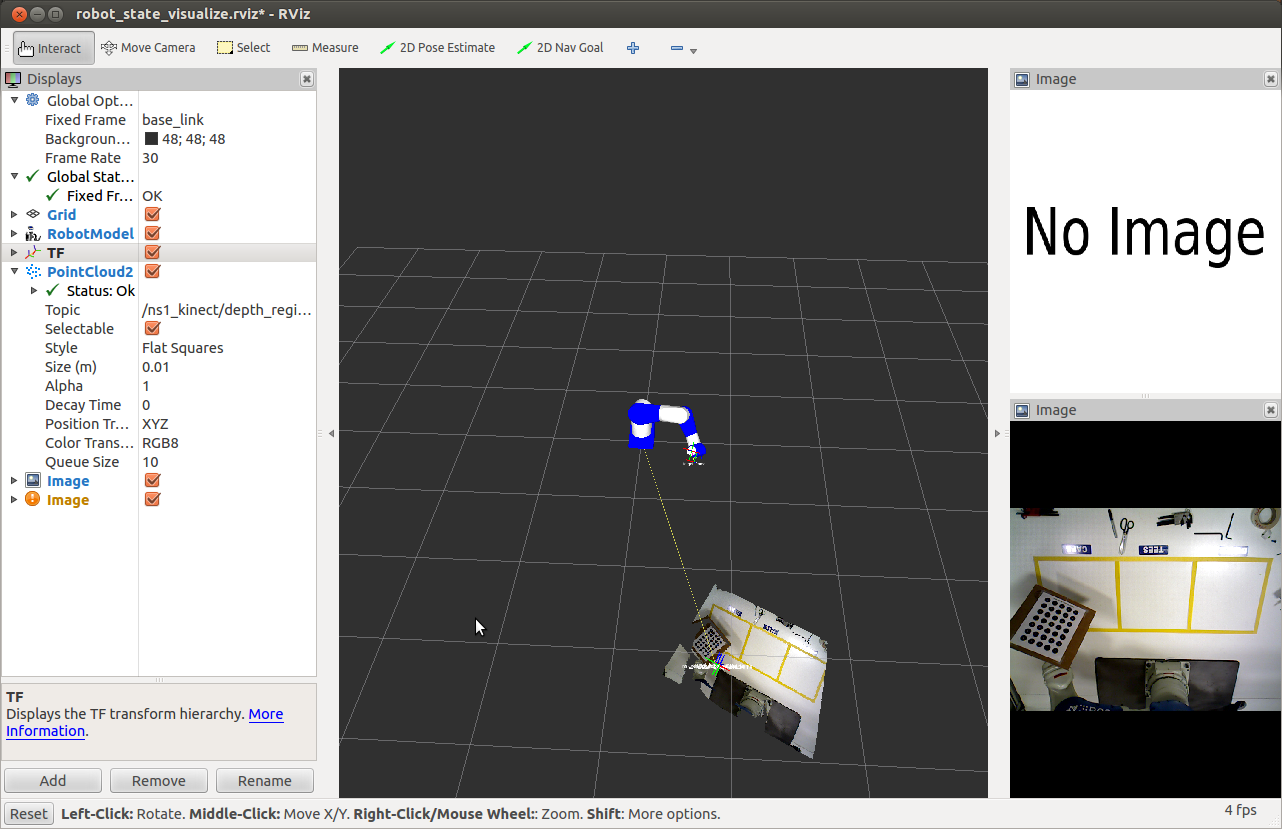

Once the calibration node has completed calibration, the PointCloud2 will start to publish the calibrated results. Note: sometimes you cannot see the Point Cloud; this is because the transform is only broadcasted once. Run the program again and results should appear.

Running Calibration Service

Currently when you run the calibration service, the yaml files which describe a 1 camera, 1 circle grid 5x7 target, 1 robot system are: test1_camera_def.yaml, circlegrid5x7_target_def.yaml, test1_caljob_def.yaml.

roslaunch industrial_extrinsic_cal calibration_service.launch

Once this service is started, the PointCloud2 will start to publish the initial guess at the camera extrinsics which comes from the camera_definition.yaml file. In a separate terminal window:

rosservice call /calibration_service

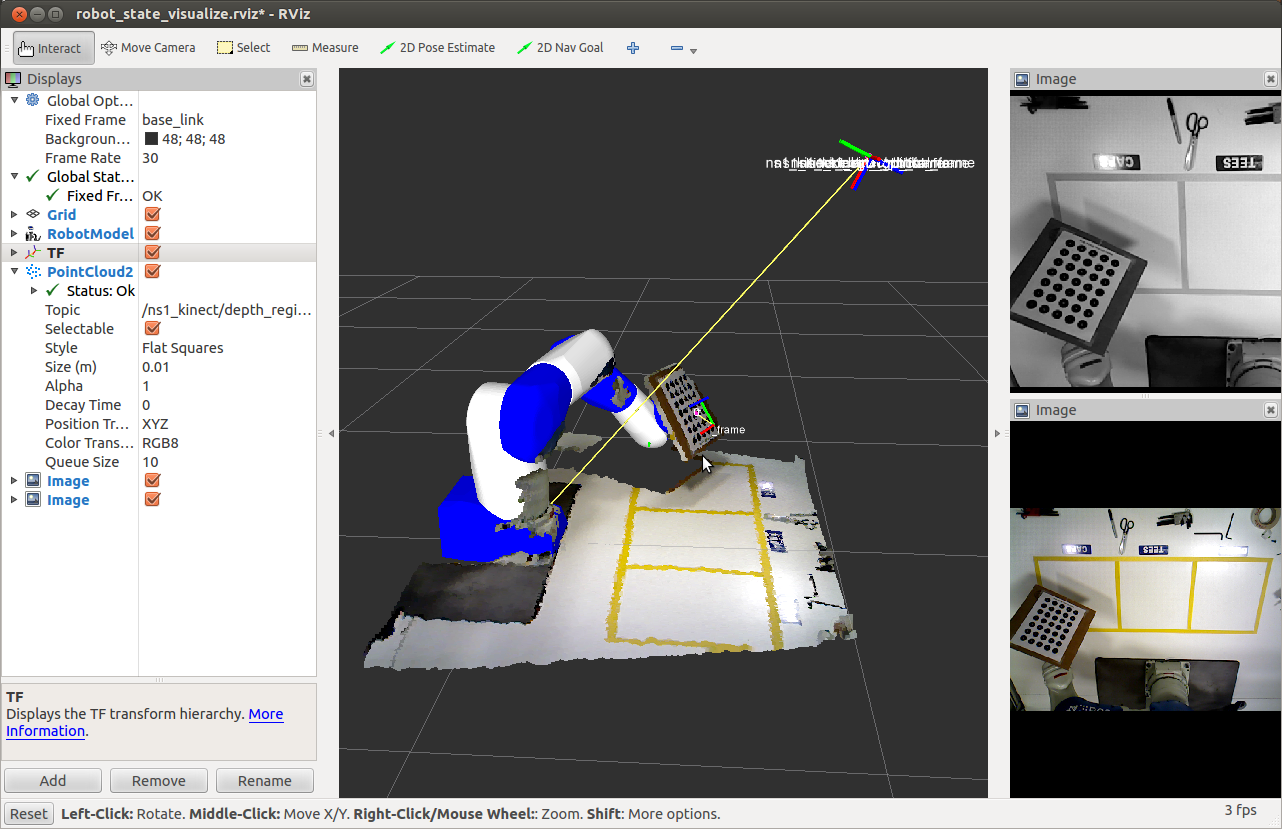

Once the service is called, the calibration will be run and the PointCloud2 will start to publish the calibrated results.

Results

In both cases a launch file is created and saved for future use. It launches a static_tf_transform node with the transform information from the reference frame (world_frame) to the camera frame (ns1_kinect_link).

Images of the rviz screens are below (pre-calibration, and post-calibration). For the 2 camera 1 target setup, you will have no PointCloud2 data until the node is complete.

For the Camera Robot setup, a PointCloud2 will appear at the startup of the service node (pictured below) - it will be off from the real world as it comes from the camera_definition.yaml file.

Below is a side by side of the real world setup and the calibrated results in rviz.

Variations

No robot, but want to try camera to robot cal

Say you'd really like to see the calibration work with a robot, except that you lack a robot. You can run the 1 Camera variation described above, but the only difference would be the third step, running the visualization. Instead of running robot state visualize, run:

roslaunch motoman_sia10d_support test_sia10d.launch

This would of course work with any robot type.

Different Targets

As mentioned previously, there are currently 7 targets in the repo: three variations on the checkerboard (same checkers (0.040m squares), one is an 7x6 (checkerboard6x5_target_def.yaml) and one is 9x9 (checkerboard8x8_target_def.yaml), the one used in this example which was 12x12 (test2_target_def.yaml). There are 4 variations on the circle grid, all using 25 mm circles at 35 mm spacing: two 7x5 grids (test1_target_def.yaml and circlegrid7x5_target_def.yaml) a 5x7 grid (circlegrid5x7_target_def.yaml) and a 7x7 grid (circlegrid7x7_target_def.yaml). You may of course print a target of your own making and create a corresponding target.yaml file. A helpful tool that still needs to be developed is a target yaml file generator. It is quite tedious to create these target files by hand, but that is currently the only option.

Implement your own Observer

The observer implemented in these examples was the ROSCameraObserver. It should work for any ROS compatible cameras. This ROSCameraObserver class inherits from the more generic CameraObserver class which has pure virtual functions (addTarget, triggerCamera, etc.). A different type of observer can be created for different sensors, and can use the CameraObserver as the base class. What is returned from the observer is a set of x and y points which have been observed by the camera and correspond to a specific target.

Using the Calibration Plate

Within the targets directory there is a pdf drawing as well as a SolidWorks part model and drawing of a Calibration plate. This tutorial was written before the plate arrived at our facility. The plate is sized/designed to be used with the Calibration Targets (circle grid) also available in the targets directory. The four holes on the rectangular face of the plate align with the four corner circles of the circle grid. The mounting holes on the plate are designed to fit: Motoman SIA20d, ABB IRB2400, Universal Robots UR5, and Fanuc R2000. The intended use of the calibration plate is to mount onto the robot end plate and orient the arm in the normal operation orientation (i.e. end effector facing downwards for a pick and place application) with the target facing upwards towards the camera system (so the z offset from the robot end plate to the target frame should be zero, not the thickness of the plate).

With a calibration plate made to the specification outlined in the drawing, there should be a known distance from the robot end effector transfrom (typically tool0) to one of the corner circles on the target. The circle grid target should be aligned such that the four holes are exactly aligned with the four corner circles. The known distances to the corner cirlces (first set at +/- 70mm and 120 mm, second set at +/- 70mm and 330 mm) helps establish a "robot to target" transform that can be used in a static transformer to publish the target_frame. (See section 4.1)